Nikhef Site Report

Dennis van Dok

2019-03-26

Nikhef mission

The mission of the National Institute for Subatomic Physics Nikhef is to study the interactions and structure of all elementary particles and fields at the smallest distance scale and the highest attainable energy.

- Accelerator-based particle physics

- Astroparticle physics

Organisation

Personnel

Projects

LHC Experiments

Cosmic phenomena

Other Programs

- Theory programs

- Enabling programs

- Detector Research and Development

- Physics Data Processing

Computer technology

- Office automation

- Project support (detector software engineering)

- Scientific computing (a.k.a. Physics Data Processing or PDP)

People

New sysadmin in the PDP group: Mary Hester joined in February.

The Computer Technology group hired a new webmaster: Roel Roomeijer joined the system administration team in March.

Physics Data Processing Group

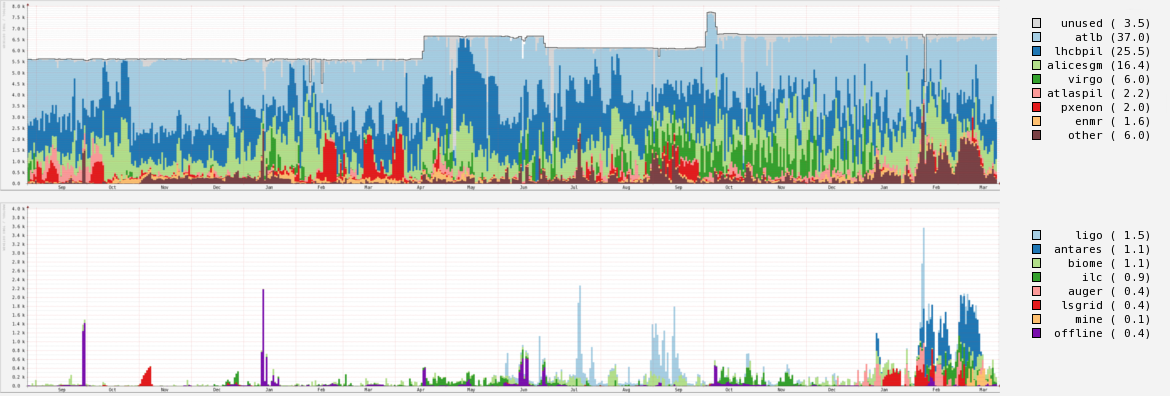

WLCG Tier-1

Nikhef-PDP is half of the Netherlands Tier-1.

The other half is SURFsara.

Together we also participate in the DNI, the Dutch National Infrastructure for data/compute intensive sciences.

- LOFAR (radio astronomy)

- Project MinE (genetic causes of motor neuron disease ALS)

- LIGO/VIRGO

Computing last year

SURFsara

(thanks to Onno Zweers)

- First non-HEP pilots for a high-throughput OpenStack platform;

- LOFAR download servers to be replaced with a solution based on dCache macaroons;

- SKA: started running network tests with Australia & South Africa;

- New storage dashboards for VOs;

- Ceph as backend underneath dCache (v4.2): so far, performance is not yet production-ready.

New Hardware

AMD EPYC

81 DELL R6415 nodes (3 racks) with one AMD EPYC 7551P 32-Core Processor.

- Two racks for NIKHEF-ELPROD compute cluster.

- One rack replaces the local stoomboot cluster (more about that later).

Price/performance is good.

| CPU | HEPSPEC06/core | €/core |
|---|---|---|
| Intel(R) Xeon(R) Gold 6148 | 19.57 | 315 |
| AMD EPYC 7551P | 14.94 | 247 |
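The table figures can be turned into a price per HEPSPEC06 and a rough total for the new cluster. This is a minimal sketch: it assumes the per-core benchmark figure applies to all 32 cores of each of the 81 nodes, and the names `Cpu`, `eur_per_hs06` and `cluster_hs06` are illustrative, not part of any Nikhef tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    name: str
    hs06_per_core: float  # HEPSPEC06 per core, from the table
    eur_per_core: float   # euro per core, from the table

    @property
    def eur_per_hs06(self) -> float:
        """Price of one HEPSPEC06 unit in euro."""
        return self.eur_per_core / self.hs06_per_core


CPUS = [
    Cpu("Intel Xeon Gold 6148", 19.57, 315.0),
    Cpu("AMD EPYC 7551P", 14.94, 247.0),
]

# Cluster size from the slide: 81 nodes, one 32-core CPU each.
NODES = 81
CORES_PER_NODE = 32


def cluster_hs06(cpu: Cpu, nodes: int = NODES, cores_per_node: int = CORES_PER_NODE) -> float:
    """Estimated total HEPSPEC06, assuming every core delivers the table figure."""
    return cpu.hs06_per_core * nodes * cores_per_node


if __name__ == "__main__":
    for cpu in CPUS:
        print(f"{cpu.name}: {cpu.eur_per_hs06:.2f} EUR/HS06")
    epyc = CPUS[1]
    print(f"EPYC cluster: {NODES * CORES_PER_NODE} cores, "
          f"about {cluster_hs06(epyc):.0f} HS06")
```

By these table figures the two CPUs come out within a few percent of each other per HEPSPEC06, while the EPYC has the lower price per core.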

Tried both with and without hyperthreading. It seemed to provide little benefit, so it is turned off now.

A single-socket system doesn't suffer the cache coherence penalty between sockets.

Fast 3.2 TB NVMe SSDs for local storage

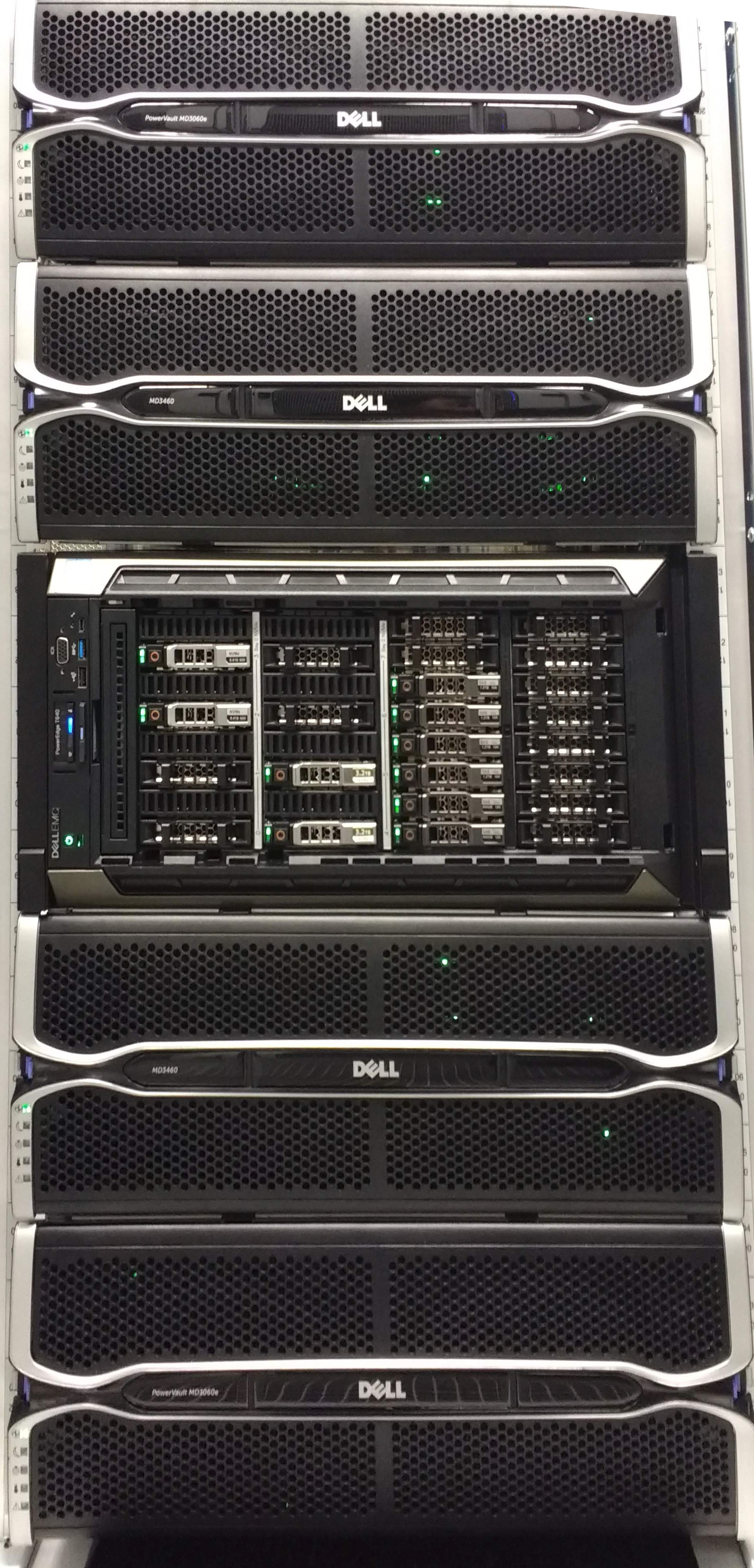

Grid storage

/data

Old Hitachi storage system replaced by NetApp.

- Initially tried a Fujitsu Eternus DX200, but this did not work because it could not handle LDAP+TLS.

- Currently a dual NetApp FAS8200 with 2× 300 TB disk and 2× 9 TB SSD.

- Redundancy but no back-up.

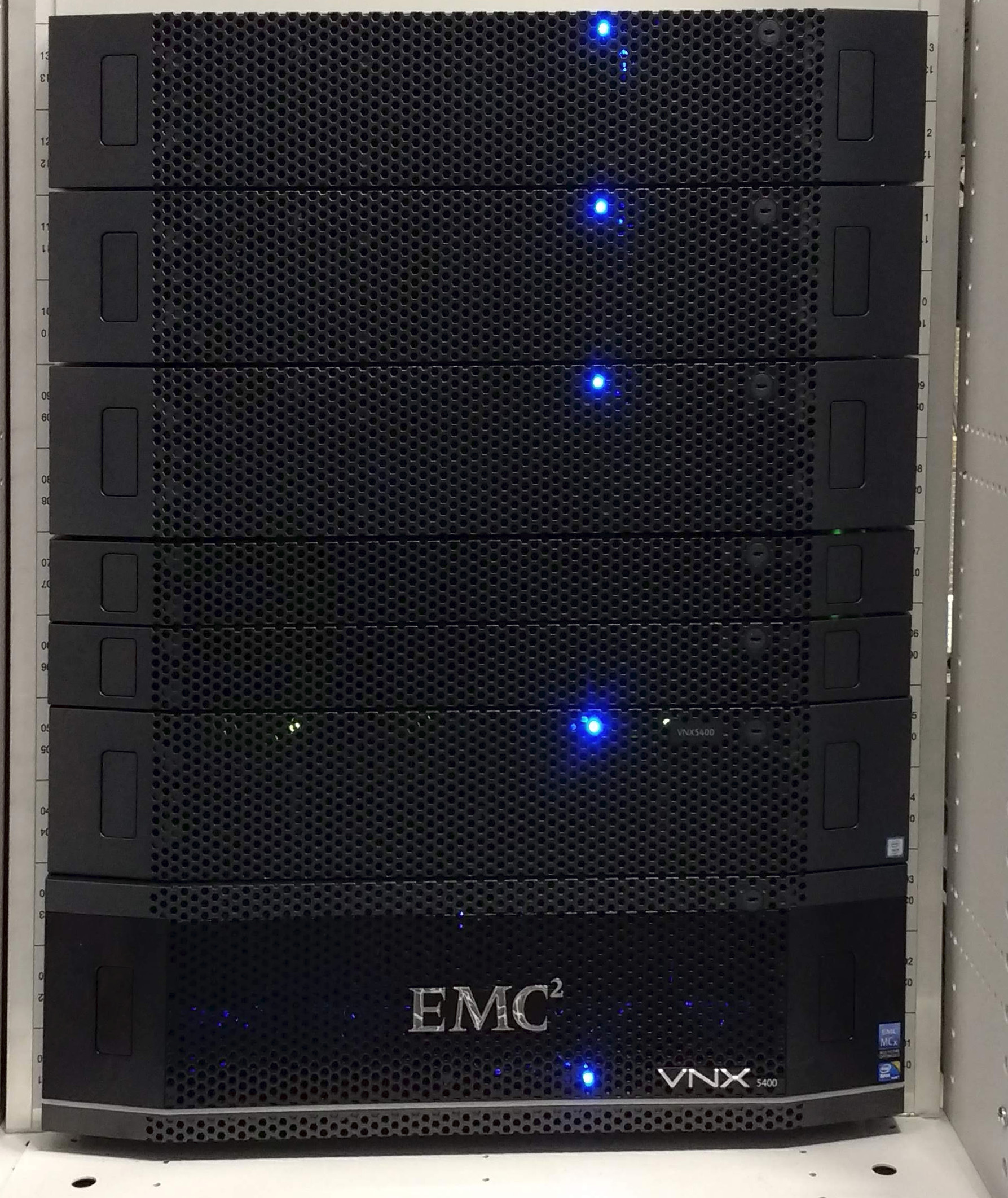

/project: EMC VNX5400

This was bought for the /project storage: important data that has an external backup. The data is available on Unix and Windows systems, so it uses a mixed mode of Unix permissions and Windows ACLs.

Not too many vendors offer this combination. In fact, the EMC is the last of its kind to offer it.

Off-site backup

Partnership with University of Groningen led to a geographically separated off-site backup solution.

Hardware and software managed and maintained by Groningen; component replacements done by local team.

Much more economical than the previous commercial offering.

Developments

Private cloud

Setting up a high-throughput private compute cloud with OpenStack.

Project was not getting much traction. Several avenues were explored; lack of long-term stability in the software development played a role.

Currently given a higher priority due to internal demand for alternatives to classic cluster computing. Nikhef as a lab is trying agile as a way of managing projects, and the cloud project is likewise run in an agile way.

Salt configuration management

Progress has been made in bringing more systems under Salt's control: LDAP, DNS, dCache.

Integrated generation of Icinga checks.

Not replacing the legacy grid services which may not be with us much longer.
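Generating Icinga checks from configuration-management data can be pictured as rendering Icinga 2 object definitions from a host inventory. The sketch below is an assumption about the general shape, not the actual Nikhef Salt integration: the `INVENTORY` dict, the host names and addresses, and the function `render_host` are hypothetical. The check commands used (`hostalive`, `ldap`, `dns`, `ssh`) are standard commands from the Icinga Template Library.

```python
# Hypothetical inventory; in a real setup this would come from Salt data.
INVENTORY = {
    "ldap01.example.org": {"address": "192.0.2.10", "checks": ["ldap", "ssh"]},
    "dns01.example.org": {"address": "192.0.2.20", "checks": ["dns", "ssh"]},
}


def render_host(name, address, checks):
    """Render one Icinga 2 Host object plus one Service object per check."""
    lines = [
        f'object Host "{name}" {{',
        f'  address = "{address}"',
        '  check_command = "hostalive"',
        "}",
        "",
    ]
    for check in checks:
        lines += [
            f'object Service "{check}" {{',
            f'  host_name = "{name}"',
            f'  check_command = "{check}"',
            "}",
            "",
        ]
    return "\n".join(lines)


def render_inventory(inventory):
    """Render all hosts in a stable (sorted) order so diffs stay small."""
    return "\n".join(
        render_host(name, data["address"], data["checks"])
        for name, data in sorted(inventory.items())
    )


if __name__ == "__main__":
    print(render_inventory(INVENTORY))
```

Rendering in sorted order keeps the generated file stable between runs, which makes changes easy to review in version control.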

dCache with distributed NFS on local cluster

High-throughput clustered local file access.

Much appreciated by users

But…see below.

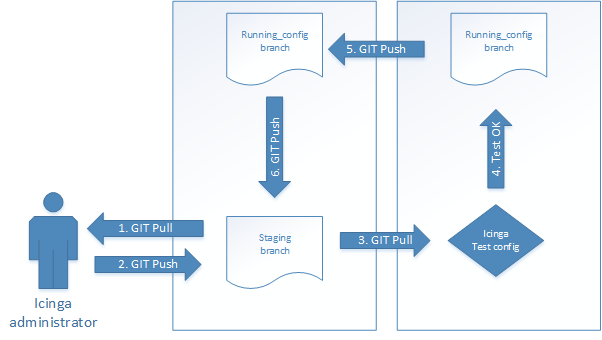

Icinga for IT Services

- Migration from Nagios to Icinga2

- The old Nagios was managed by a single person, which made it difficult to get new hosts and probes deployed.

- In the new system everyone should be able to add/edit probes for their hosts.

Standard Icinga2 install; configuration lives on the GitLab server; the server installs and tests new config automatically.

Interesting times

EMC ACL issues

Transferring ACLs from our older systems overloaded the write buffer, which locked up the entire volume.

A workaround was eventually found, but it took months for Dell EMC to have the correct engineer on the case and to come up with a good diagnosis and a workable solution.

The problem was reported in July, a resolution came in October.

Memory issues

A second issue involved the memory consumption of TCP NFS connections. This turned out to be significantly higher than for UDP, leading to a system crash and downtime.

This issue took even longer to resolve; the initial problem was found in Spring 2018; the conclusion was that the machine was sold with the wrong specs. A solution was proposed in December which means that in April we will have a planned downtime for a system upgrade.

dCache NFS

- Original cluster had 8 cores/node and 1 Gbps

- New nodes have 32 cores and 25 Gbps

- Possible bugs in NFS client implementation in Linux kernel

- Possible bugs in distributed NFS in dCache

The result was too much traffic through the NFS door and hanging clients.
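The jump in node capability gives a feel for how much more load a client could put on the NFS door. This is a back-of-the-envelope sketch using only the core counts and link speeds listed above; the figures are upper bounds on what a node could request, not measured traffic, and the names `OLD`, `NEW`, `per_core_mbps` and `link_ratio` are illustrative.

```python
# Node specifications from the list above.
OLD = {"cores": 8, "link_gbps": 1}
NEW = {"cores": 32, "link_gbps": 25}


def per_core_mbps(node):
    """Network bandwidth available per core, in Mbps."""
    return node["link_gbps"] * 1000 / node["cores"]


def link_ratio(old, new):
    """How many times faster the new node's network link is."""
    return new["link_gbps"] / old["link_gbps"]


if __name__ == "__main__":
    print(f"old node: {per_core_mbps(OLD):.2f} Mbps per core")
    print(f"new node: {per_core_mbps(NEW):.2f} Mbps per core")
    print(f"link speed ratio: {link_ratio(OLD, NEW):.0f}x")
```

Each new node has four times the cores and a link 25 times faster, so a single node can in principle generate far more NFS traffic than before.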

Resolution:

- Temporarily reinstall part of the cluster with SLC6

- Disable dCache NFS access on other nodes

- Stress test an installation of dCache 5

- Planned upgrade in April

Future plans

Roll out a high-throughput cloud

The vision of this is still blurry. It is hard to get a good sense from the users what they would want. Finding a representative pilot community is important.

If possible, we will virtualize the current legacy grid capacity and explore its elasticity.

CREAM on the way out

Considering alternatives:

- ARC

- HTCondor (adopting HTCondor for more than just batch jobs seems to have benefits)

400 GB network tests

Talk to Tristan.

DPM/dCache

Trying to minimize the maintenance of legacy knowledge (technical debt): since dCache is now used at Nikhef, having both dCache and DPM in one lab makes no sense. We're going to phase out DPM and set up dCache for grid storage.

See you in Amsterdam

HEPiX Amsterdam, 14–18 October 2019