Motivation

The main purpose of this web page is to present my arguments as precisely as possible. In particular, I want to distinguish between anthropic arguments involving the structure and parameters of the Standard Model, and the familiar anthropic argument for a small but non-zero cosmological constant. While the former kind of argument is much harder to make precise, it also seems much harder to evade.

To put the recent developments in the proper perspective, I will start with a brief history of the idea of “uniqueness” of the laws of physics.

Uniqueness

One of the dreams of the last century was that of a unique and unified theory of all interactions. Often these two concepts were not clearly distinguished, and the word "unique" was omitted. How could a theory of all interactions be anything other than unique?

This dream has its origin somewhere in the first half of the last century, and is often associated with Einstein's lifelong struggle to find a "Unified Field Theory". After the discovery of General Relativity, a theory based on simple and elegant principles, it was quite natural to assume that everything else should allow such a description as well. For a while, only one other interaction was known, Maxwell's theory of electromagnetism, which was also based on simple and elegant principles. Additional evidence was the fact that both theories were found to be based on the principle of local gauge invariance, and that they could be unified into a five-dimensional Kaluza-Klein theory. It must have seemed just a matter of getting the details right.

But then the first cracks in this concept began to show. There was experimental evidence for additional interactions, the strong and the weak force, which at first did not seem to have any of the simplicity and elegance of the other two. New particles were discovered, such as the muon, which seemed to have no obvious place in a unique theory. And there were many parameters, such as the electron/muon and electron/proton mass ratios and the fine structure constant, which defied any explanation in terms of simple mathematical expressions. The belief in some sort of uniqueness is exemplified by the fact that many people regarded the fine structure constant α as a fundamental number that should be calculable.

Not only experimentally, but also theoretically the idea of uniqueness was getting into trouble. The theory of electromagnetism could be viewed as a gauge theory of a phase, and one could plausibly imagine a phase as something unique. But after the work of Yang and Mills it became clear that the phase was just a special case of a gauge group, and that there was an infinity of other possibilities.

All this then led to the Standard Model, one of the milestones of physics in the last century. The description of all known non-gravitational interactions in terms of Yang-Mills theories restored the conceptual uniqueness that seemed lost for a while. But structurally, there is nothing unique about the standard model. Given the choice of gauge group and particle representations, for which no fundamental explanation is known, there are nineteen free parameters (ignoring neutrino masses). These parameters are not only incalculable within the standard model; in some cases they also have "unnaturally" small values.

The prevalent belief was that this could not be the end of the story. The Standard Model should be embedded in some other theory, and within that theory all of its parameters should then be calculable. In the seventies and eighties several ideas of this kind were tried, but with little success. All of these, such as technicolor, compositeness and various kinds of grand or less grand unification led to other gauge theories, suffering from the same fundamental non-uniqueness as the standard model gauge theory: choices of groups, spins and representations, and couplings have to be made. Furthermore, in most cases the resulting gauge theories are not even simpler than the standard model one.

The most popular of these ideas, SO(10) grand unification, requires a choice of the gauge group itself, a choice of three for the number of families, a choice of Higgs scalars and a large set of couplings. Even in the simplest non-supersymmetric SU(5) model (apart from its being ruled out experimentally), the number of in-principle observable parameters actually increases, because of quark-lepton mixing angles. In addition, SU(5) grand unification gave the first indication of something even more disturbing for the idea of uniqueness. The standard SU(5) breaking, using a Higgs in the adjoint representation (24), allows two distinct classes of vacua: one with the correct gauge group SU(3) × SU(2) × U(1), and one with the wrong one, SU(4) × U(1). This raised the possibility that some of the features of the standard model that we would perhaps like to explain are actually determined by a vacuum choice in the early universe: a choice that could equally well have been different, and that perhaps cannot be computed from a fundamental principle. I remember realizing (some time in the late seventies) that the same could be true for most or all parameters of the standard model, and I also remember being rather shocked by that possibility.
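The two SU(5) vacuum classes can be made a bit more concrete with a small sketch. It assumes a diagonal adjoint vacuum expectation value diag(v_1, ..., v_5), which is traceless as the adjoint requires, and reads off the unbroken subgroup from how often each eigenvalue repeats. The function name `unbroken_subgroup` is hypothetical. The sketch only identifies the symmetry of a given VEV, up to discrete factors. It does not model which pattern is actually the minimum; that depends on the couplings in the Higgs potential.

```python
from collections import Counter
from typing import Sequence


def unbroken_subgroup(vev: Sequence[int]) -> str:
    """Unbroken subgroup of SU(n) for a diagonal adjoint VEV (illustrative sketch).

    Generators that commute with diag(v_1, ..., v_n) are block-diagonal in the
    blocks of equal eigenvalues. A block of size m > 1 contributes SU(m), and
    k distinct eigenvalues leave k - 1 independent U(1) factors. The result is
    the local (Lie algebra) structure only; discrete factors are ignored.
    """
    if sum(vev) != 0:
        raise ValueError("an adjoint VEV of SU(n) must be traceless")
    # Multiplicities of the distinct eigenvalues, largest block first.
    multiplicities = sorted(Counter(vev).values(), reverse=True)
    factors = [f"SU({m})" for m in multiplicities if m > 1]
    factors += ["U(1)"] * (len(multiplicities) - 1)
    return " x ".join(factors) if factors else "trivial"


# The two classes of vacua mentioned in the text:
print(unbroken_subgroup([2, 2, 2, -3, -3]))  # SU(3) x SU(2) x U(1)
print(unbroken_subgroup([1, 1, 1, 1, -4]))   # SU(4) x U(1)
```

Both patterns preserve the rank (four) of SU(5). What distinguishes them is how the five eigenvalues are grouped, and nothing in the group theory itself prefers one grouping over the other.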

It seems pretty clear that fundamental uniqueness cannot be achieved if we limit ourselves to Yang-Mills theory. Unless something was overlooked (which of course can never be excluded), the only remaining hope is gravity. General Relativity certainly does seem to have an air of uniqueness, and indeed this was undoubtedly the dominant motivation for Einstein’s quest for a “Unified Field Theory”. On closer inspection, though, this uniqueness is spoiled by an infinite series of powers of the curvature tensor that one can add to the action with a priori undetermined parameters. And there is one more such parameter, the cosmological constant, a term of zeroth order in curvature. Furthermore, from a classical point of view one would not expect gravity to have anything to say about the standard model. For classical gravity, this is just "matter", and the only requirement imposed on it is that it should have a conserved energy-momentum tensor.

There are reasons to believe that in Quantum Gravity this could be different. Quantum corrections from all the matter also make naively divergent contributions to the parameters of gravity. Therefore, to tame the divergences, one may need control not only over the gravitational but also over the matter contributions.

Although some people had this insight in the early eighties, for most it arrived in a different way, namely from string theory. String theory had become a candidate theory of quantum gravity around 1975, but for almost a decade it did not seem capable of incorporating one of the most striking features of standard model matter: chirality. That changed in 1984, with the discovery of the Green-Schwarz anomaly cancellation mechanism and the construction of the heterotic string. This theory lives in ten, not four, space-time dimensions, and it is not quite unique: there are two heterotic strings, three other superstrings and a handful of non-supersymmetric strings in ten dimensions. If we ignore these problems for a moment, it does seem close to fulfilling the dream of unification and uniqueness. Everything we know today suggests that it unifies all matter and all interactions with gravity, that it is perturbatively finite, and that all higher-order gravity interactions are fixed and computable. The cosmological constant is a computable number (zero for superstrings, non-zero and one-loop finite in the only non-supersymmetric tachyon-free theory). Moreover, any attempt to add or remove interactions in this theory leads to inconsistencies. For a ten-dimensional observer this would be the nearly perfect realization of what some four-dimensional observers are hoping for. Finally, the ten-dimensional non-uniqueness problem was solved ten years later with the discovery of string dualities, relating all the supersymmetric theories to each other and to a non-string theory in 11 dimensions (the status of the non-supersymmetric strings is less clear).

But to arrive at a theory in four dimensions, one either had to "compactify" six dimensions on a suitable manifold, or generalize the ten-dimensional construction to four dimensions. The former approach required manifolds of a special type, so-called Calabi-Yau manifolds, and it became clear quite rapidly that there are large numbers of such manifolds. This number explodes even further if one considers the additional possibility of torsion, as Strominger did in 1986. Direct four-dimensional constructions also started in 1986, and also pointed in the direction of a huge number of possibilities. In particular, there were constructions with world-sheet fermions, pioneered by Kawai, Tye and Lewellen and by Antoniadis, Bachas and Kounnas, and a bosonic construction that I worked on in collaboration with Wolfgang Lerche and Dieter Lüst. Already in 1985 Narain had pointed out that a continuous infinity of four-dimensional strings could be constructed, but his method led to string theories with too much supersymmetry and no chiral fermions. After it had been demonstrated that Narain's construction could be viewed as a generalized torus compactification, it was no longer seen as a threat to uniqueness. The explosion of possibilities that emerged in 1986 was a more serious problem: these theories could have chiral spectra, and examples with one supersymmetry, or none, could be constructed.

The authors of these 1986 papers commented on their results in rather different ways. Strominger wrote “all predictive power seems to have been lost”, and concluded that a dynamical principle to select the right string theory was badly needed. Kawai, Tye and Lewellen took the point of view that the right string theory should be selected by phenomenological requirements, and expressed the belief that a complete classification is a tractable problem. Antoniadis, Bachas and Kounnas wrote that the number is so huge that classification is "impractical and not very illuminating". Lerche, Lüst and I tried to estimate the number of models, came up with the number 10^1500, and concluded that "even if all that string theory could achieve would be a completely finite theory of gravity and all other interactions, with no further restrictions on gauge groups and representations, it would be a considerable success". This phrase is followed by a kind of “swampland” argument, stating that still not everything is possible.

In subsequent years a few important insights emerged. It was realized that the various constructions were not as distinct as they seemed, and that they could presumably be viewed as different "ground states" of string theory. It became clear that the four-dimensional string constructions, just like the geometric Calabi-Yau constructions, had continuous parameters ("moduli"), corresponding to flat directions in a potential, a fact that was not understood initially. Most importantly, it became clear that all parameters in the standard model (or any other gauge theory) are determined by the values of these moduli. This is usually stated as follows: String Theory has no free parameters; all parameters of low-energy theories correspond to vacuum expectation values of scalars. This also offered an escape to those people who were still hoping for a unique outcome. Flat directions in the potential of the scalar fields in our universe are not acceptable: they imply the existence of massless scalars, which are not observed. Furthermore, most four-dimensional theories were supersymmetric (and the ones that were not had unacceptably large values for the cosmological constant and divergent quantum corrections at second order in perturbation theory). Supersymmetry has to be broken. This will typically lift the flat directions of the moduli and replace them by a non-zero potential that often has its minimum at infinity. This leads to a runaway behavior of the moduli, which is also unacceptable. The challenge was to break supersymmetry and find a potential that stabilized the moduli. This did not seem like an easy task, and led to the hope that there might be very few such vacua.

In the period 1986-2000 one can distinguish two different attitudes to the string vacuum proliferation. Some people continued hoping for or believing in a unique outcome, either due to an understanding of the moduli stabilization problem, or from some yet to be discovered selection criterion. Others adopted a pragmatic point of view. The correct vacuum was to be determined simply by requiring agreement with experimental data. This should be a strong enough constraint to select a unique vacuum. The existence of other vacua would be irrelevant; only our own universe matters.

After the aforementioned work from 1986 I was forced to redefine my expectations about String Theory. For a brief period I had been carried away by the initial euphoria of 1984. Indeed, despite the fact that compactifications did not seem to lead to a unique answer right from the start, there was a feeling that soon everything, including all quark and lepton masses, would be derived from string theory. By 1986 that had become an unreasonable expectation. When I started thinking about this, I arrived at a conclusion that was different from the ones stated above. I concluded not only that the ground state was going to be highly non-unique, but that this was the only sensible outcome, if string theory was correct.

Anthropic regions in the space of gauge theories

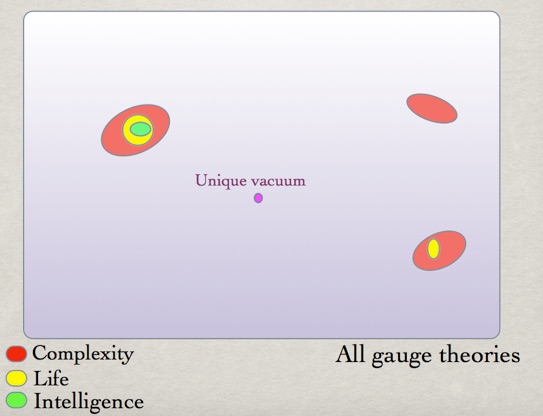

The argument goes like this. Consider the space of all gauge theories coupled to arbitrary matter. This is a huge space, with discrete and continuous parameters. We are going to consider two “gedanken calculations”: calculations we cannot really do, but for which we can make plausible guesses about the result. The first computation determines whether observers, or intelligent life, can exist in a universe governed by a given gauge theory. This is a “gedanken” calculation because we are technically unable to do it. We certainly would not be able at present to derive the existence of life from the standard model Lagrangian. Even the definition of life is a problem. However, it seems reasonable to assume that some level of complexity of the spectrum is necessary for life, and complexity, unlike life, is something that can be defined and computed, at least in principle.

So one can at least imagine drawing contours of some appropriately defined complexity function on the space of all gauge theories. Each such contour divides the space into two regions, one with sufficient and one with too little complexity for intelligent life to exist. Both regions are non-empty: it is not hard to construct gauge theories that have completely uninteresting and hence “lifeless” spectra (such as a single U(1) coupled to a single charged particle), and obviously the Standard Model provides an example of sufficient complexity. The computation would involve strong interaction gauge dynamics, nuclear physics and chemistry for some gauge theory. It is not doable in practice, but well-defined in principle.

Now consider the second gedanken computation. It would be to compute the unique gauge theory resulting from some unified theory. This is a gedanken computation because no such theory is known, but let us take string theory as an example. If it had, for example, a unique ground state with all moduli stabilized, finding it might involve something like solving complicated inequalities derived from properties of Calabi-Yau manifolds. It would be a purely mathematical computation.

The problem is now that the unique result of the second computation has to fall within the contour of sufficient complexity of the first, even though these appear to be completely unrelated computations. This problem becomes more serious if the region inside the contour is small, and anthropic coincidences would suggest that it is indeed small. I have used the assumption of “uniqueness” above just to clarify the argument; one may also read it as “a very small number of ground states”. To make this a bit more quantitative, suppose that for some choice of boundary, measure and complexity function, the fraction of the space of gauge theories that is sufficiently complex is x. With a unique ground state, the chance of not ending up in this region is 1-x, assuming the result of the second gedanken computation could have been any point in the space with equal probability. With N ground states, each landing independently in this way, this chance is (1-x)^N. Obviously, this chance becomes small if N >> 1/x. To be precise, define M = Nx, the factor by which the number of ground states exceeds the inverse of the relative size of the complexity region. Then, for small x, the chance of missing a region with life and observers goes as e^(-M). If we put in some numbers currently being discussed in connection with the string theory landscape, we get the following. Including the cosmological constant and assuming some anthropic fine-tunings in the standard model, x might be something like 10^-140. For N = 10^500, the chance of having only lifeless universes is fantastically small: exp(-10^360).
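The estimate above can be checked with a few lines of arithmetic. The sketch rests on the same assumptions as the text: each of the N ground states lands independently and uniformly on the space of gauge theories, and the anthropic region has relative size x. The helper names are hypothetical. Landscape-sized numbers such as N = 10^500 overflow floating point, so for those the sketch only computes log10 M.

```python
import math


def p_all_lifeless(n_vacua: int, x: float) -> float:
    """Exact (1 - x)**N: chance that no ground state lies in the anthropic region.

    log1p keeps the result accurate when x is tiny, where 1 - x would round to 1.
    """
    return math.exp(n_vacua * math.log1p(-x))


def p_all_lifeless_approx(n_vacua: float, x: float) -> float:
    """Small-x limit: (1 - x)**N ~ exp(-M), with M = N * x."""
    return math.exp(-n_vacua * x)


def log10_m(log10_n: float, log10_x: float) -> float:
    """log10 of M = N * x, for values of N and M too large for floats."""
    return log10_n + log10_x


# A unique ground state with half of all gauge theories anthropic (x = 0.5):
print(f"{p_all_lifeless(1, 0.5):.2f}")                # 0.50
# Moderate numbers: the exact and approximate forms agree closely.
print(f"{p_all_lifeless(10_000, 1e-3):.3e}")          # 4.517e-05
print(f"{p_all_lifeless_approx(10_000, 1e-3):.3e}")   # 4.540e-05
# Numbers quoted in the text: x ~ 10^-140, N ~ 10^500.
print(log10_m(500.0, -140.0))                         # 360.0, so P ~ exp(-10^360)
```

The first line reproduces the 1-x case for a unique ground state. The last line shows that the whole landscape estimate reduces to adding exponents.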

The puzzle is therefore how two such seemingly unrelated computations could match. One may distinguish five classes of ways out of this dilemma, namely

1. Life is abundant, and the ground state is unique.
2. Life is abundant, and there is a discretuum of ground states.
3. Life is rare, the vacuum is unique, and we will never know why these computations match.
4. Life is rare, the vacuum is unique, but there is a mysterious relation between the two gedanken calculations.
5. Life is rare, but there is a discretuum of ground states.

The first option would imply that the chance that the mathematically unique universe is lifeless is (1-x), a number that would never become extremely small. Even if half of all possible gauge theories allow complexity and life (which looks like a preposterous assumption), we would still be left with the worrisome conclusion that we have had a 50% chance of not existing. But perhaps we could live with that.

The second option is overkill, and probably not favored by anyone. The third option would presumably be considered unacceptable by most scientists, and hence if one prefers both a unique ground state and a scientific explanation one is forced into option 4. Mysterious relations between seemingly distinct computations have occurred before. String dualities or the AdS/CFT correspondence are spectacular examples. But here we need a relation between pure mathematics on the one hand, and nuclear physics and chemistry on the other, relating discrete points to regions in contour plots. This looks like an absurdity to me. Option five looks like the most attractive one by far.

Why now?

An often heard objection to using the anthropic principle is that it amounts to giving up on finding an explanation in terms of some physical mechanism. Invoking it now may be premature, and had it been invoked in the past, we might never have made some of our most important discoveries. Consider the following caricature of anthropic reasoning. After Newton had derived the planetary orbits from a 1/r^2 force, he could have looked for a fundamental reason why the inverse power was 2, and not any other number. Let us imagine for the sake of the argument that he had no idea about that (although that would not be quite correct, because already at that time the relation between spherical propagation and inverse square fall-off was known, at least for the propagation of light). It should have been easy for Newton to find out that any deviation from 2 would lead to inward or outward spiraling orbits. Apart from noticing that this is in disagreement with observation, he might have argued that any value different from two by even a small amount would make the existence of life for an extended period of time impossible. If he really viewed the power as a free parameter, he might then have argued that for a value so close to 2 to occur naturally, a continuum, or perhaps even a dense discretuum, had to exist in nature, and we just happened to live in a solar system (or universe) where the value was very close to 2.

This example stretches the imagination for a number of reasons. First of all, an argument like this would not appear to fit at all in Newton's world view or that of his contemporaries. If we distort history and imagine that people had already discovered and accepted evolution at that time, it already becomes somewhat easier to imagine that someone might arrive at such a conclusion. If furthermore the observed power was not 2, but for example 2.3, and if indeed stable orbits could be shown to exist only for precisely that power[1], an anthropic argument might seem more tempting. But one essential ingredient would still be missing. If someone discovered a consistent theory of gravity where the power was a free parameter, and which was equally consistent for any value of that parameter, it seems impossible to escape from the conclusion that the reason we observe the value 2.3 is that for any other value there would be no observers. And finally one more ingredient would probably be needed to make us feel comfortable with that conclusion, namely a mechanism that allows different values to be selected at different places and/or times.

Let us compare this caricature with two cases for which anthropic arguments are invoked today, namely the cosmological constant, and the structure and parameters of the standard model. To start with the latter case, neither the group structure nor the parameters of the standard model look very much like mathematically preferred values, unlike the “2” of the power law. But much more importantly, we have consistent theories for most of the discrete choices (groups and representations) and parameter values: gauge theories. One may worry that gauge theories might only be a low energy effective approximation to something more fundamental. Indeed, for any given gauge theory (choice of groups, representations and parameters) we do not know if it has an ultraviolet completion, in other words if it remains consistent at arbitrarily short distances. But that does not matter for the arguments used above. Indeed, the most important feature of gauge theories is their renormalizability. This means precisely that they are valid regardless of the precise ultraviolet physics. Therefore we can discuss the low energy properties (such as nuclear physics and chemistry) of any gauge theory in a meaningful way, even without knowing whether it has a well-defined ultraviolet completion. We can define functions on the space of all gauge theories that are relevant for observers to exist, such as nuclear and chemical complexity.

It is hard to think of another moment in the history of physics where a similar situation occurred, namely that a large number of equally sensible and well-defined alternatives exists for the known laws of physics that are relevant for the existence of life. Clearly this was not the case with Newton’s inverse square law. We do not know sensible alternatives to Quantum Mechanics or General Relativity. The periodic system of elements is clearly relevant for the existence of life, but there is no alternative to it that could be a starting point of an anthropic discussion. Indeed, sensible alternatives to nuclear physics and chemistry can be formulated, but only by reducing them to the standard model, and then modifying the latter. Only if we go back to the time when the orbits of the planets were still considered fundamental parameters do we encounter a similar situation, and indeed the anthropic argument was correct in that case.

Of course this argument becomes meaningless, and irrelevant, once we have insight into the existence or non-existence of ultraviolet completions (or any other consistency conditions) for gauge theories. I am just using it here to guess the answer before having that insight.

Anthropic Fine Tuning: Standard Model versus Cosmological Constant

Now consider the cosmological constant. There are three important differences with regard to anthropic considerations for the cosmological constant versus the choice of gauge theory.

1. The anthropic case is much clearer for the cosmological constant.
2. The cosmological constant is in more danger of having a mathematically unique value.
3. Ultraviolet decoupling is more worrisome for the cosmological constant.

The first point is obvious: only ridiculously small values of the cosmological constant allow observers to exist. One may argue about factors of 10, but on a scale of 120 orders of magnitude this is irrelevant, at least qualitatively.

As for the second point, a danger still hovering over the anthropic explanation of the value of the cosmological constant is the fact that the value zero lies within the anthropic window. Even though neither string theory (or any other idea for a theory of quantum gravity discussed so far) nor standard model physics seems to treat this value as special (only late-time cosmology does), there remains a possibility that we have missed something. The fact that current observations suggest a very small but non-zero value for the cosmological constant makes the scenario of an exactly vanishing value unappealing, but it is not completely ruled out. The observations could be wrong or misinterpreted, or what has been observed could be due to some quintessence-like phenomenon.

Regarding the third point: the problem here is that the cosmological constant should perhaps be viewed primarily as a parameter of a theory of gravity. Hence a fundamental theory of quantum gravity may have something to say about its value. If such a theory simply forbids a cosmological constant (even though it is allowed classically), the entire anthropic argument becomes moot: we had no right to even discuss non-zero values in the first place. Decoupling arguments are implicitly invoked to argue, for example, that the standard model field theory contributions alone to the cosmological constant are sixty orders of magnitude larger than the observed value. This seems plausible, but there is a small danger of a non-decoupling gravitational tuning mechanism that simply readjusts the cosmological constant to zero, even if no such mechanism has ever been found in a realistic model.
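As a rough check of the "120 orders of magnitude" mentioned above, the sketch below compares a Planck-scale vacuum energy density with the observed one. Both inputs are assumptions and are not taken from this page: the reduced Planck mass, about 2.4 × 10^18 GeV, serves as the natural cutoff, and the observed dark-energy scale ρ_Λ^(1/4) is taken as about 2.3 meV. In natural units an energy density scales as the fourth power of an energy, so the mismatch is four times the logarithm of the ratio of the two scales.

```python
import math

# Assumed reference values (not from the text), in GeV.
REDUCED_PLANCK_MASS_GEV = 2.4e18   # assumed natural cutoff for the vacuum energy
DARK_ENERGY_SCALE_GEV = 2.3e-12    # assumed rho_Lambda**(1/4), about 2.3 meV


def orders_of_magnitude(cutoff_gev: float, observed_scale_gev: float) -> float:
    """log10 of cutoff**4 / observed_scale**4.

    An energy density has dimension (energy)**4 in natural units, so the ratio
    of two densities is the fourth power of the ratio of their energy scales.
    """
    return 4.0 * math.log10(cutoff_gev / observed_scale_gev)


mismatch = orders_of_magnitude(REDUCED_PLANCK_MASS_GEV, DARK_ENERGY_SCALE_GEV)
print(f"{mismatch:.0f}")  # 120
```

Changing either scale by a factor of 10 shifts the result by only 4 orders of magnitude, so the qualitative picture does not depend on the precise inputs.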

Anthropic principles

A problem with the "anthropic principle" is that many versions have been proposed during the last decades. Therefore it is not always clear what exactly some people find unacceptable about it. The following is a checklist of statements that one may or may not agree with. Stated briefly, they are:

1. The standard model is not the unique mathematical solution of anything.
2. Not all alternative solutions allow observers.
3. The number of alternative solutions is sufficiently large to make the existence of a solution with observers plausible.
4. We live in the most probable universe which allows observers.

The first statement simply says that the fundamental equations that have the standard model as a solution will have other solutions as well. Here “solutions” can be stable or metastable ground states, solutions to consistency conditions, maxima of some probability distribution, or other conditions that can be formulated in purely mathematical terms, without invoking the existence of observers. By “standard model” I mean not only the gauge theory and its parameters, but also any cosmological parameter that comes with the solution, such as the lifetime and the cosmological constant. The word “fundamental” is used here to rule out intermediate theories, which may be subject to further constraints. In other words, by accepting point 1, one abandons all further hope of fully determining the standard model from purely mathematical constraints. Nevertheless, I have the impression that nowadays few people would strongly disagree with this statement. This is noteworthy in itself, because it seems to me that just a few years ago there would still have been strong resistance to such a notion. Some people try to escape from this statement by adding unstated anthropic modifications to it. For example, one may add “non-supersymmetric” to the conditions, pretending that this is just a mathematical criterion. Or one may require a metastable vacuum to be long-lived. If one accepts the existence of supersymmetric or short-lived solutions in addition to the standard model, one has already partly surrendered to the anthropic principle. However, most people would presumably even agree that the standard model solution is not likely to be the unique non-supersymmetric long-lived one.

The second point states that among the solutions there is at least one that is clearly outside the anthropic region in parameter space. Agreeing with this point implies accepting the anthropic principle in its weakest possible form. At least it then tells us why we observe parameters that lie within the anthropic region, a statement that would be meaningless if all solutions were within that region. The third statement is just a matter of enlarging the number of solutions. One may disagree with it, but there is no reason why anyone who has already accepted point 2 would have strong objections to it. In fact, once one has accepted some role for the anthropic principle, point 3 is the more attractive option, because the large number of solutions solves a problem: understanding the anthropic fine-tuning of parameters. Otherwise we would just have a seemingly useless set of additional solutions, and we would end up with neither a unique solution nor a sensible explanation for anthropic coincidences.

Point four is the most problematic one. It inevitably enters the discussion as soon as the number of solutions becomes so large that there is more than one satisfying the anthropic constraints. If point three holds, this is nearly unavoidable: having a unique anthropic solution would in itself constitute a bizarre fine-tuning. One would of course like to find a way of selecting (other than by experimental means) a solution out of the set of anthropic ones. Since by assumption all are already valid solutions of the fundamental theory, the only possibility that is left is to define some sort of probability, based on number densities of solutions, cosmological selection, or the likelihood or abundance of life. It is not always easy to define some of these quantities, and even if that can be done, it is essentially impossible to compute some of them. For example, to carry this out completely one would have to find some way of comparing universes based on entirely different gauge theories, and determine not only that observers can exist in both, but also how easily and how abundantly they are formed. And even if that can be done, there is still a fundamental problem that is unsolvable, namely that we observe only a single universe out of the entire ensemble. Hence we may simply find that we are unlikely, and there is nothing we will be able to do about that.

Why is this better than gauge theory?

We already know that we can write down an infinity of gauge theories. Now we have found that String Theory also has a huge number of possibilities. So what is new? The difference is that gauge theory is nothing but a parametrization of all possibilities allowed by a set of symmetries. The Landscape, on the other hand, is supposed to be the complete set of gauge theories allowed by a fundamental theory. There is not going to be another theory on top of this that makes a further selection. This is supposed to be the ultimate answer.

Of course we do not know if that is true for the current String Theory Landscape, but it is of interest only in that case. To put it differently, a hypothetical theory that is more fundamental than String Theory and contains it, perhaps as a special case, would be regarded as the ultimate goal of string theorists anyway.

This is precisely what happened with “M-theory”.

Fundamental Theory?

Is the whole concept of a fundamental theory perhaps too naive? Perhaps, but there is already an example that would satisfy my expectations. QCD seems to be the perfect theory of the strong interactions. It is “fundamental” in the sense that we would not expect to discover strong interaction phenomena that cannot be described by it. In the end, this is of course an experimental issue, and always will be. It would, however, be shocking if someone discovered a feature of, for example, the quark-gluon plasma that could not be correctly described by QCD. There is no concept of “beyond QCD”, unlike “beyond perturbation theory” or “beyond the standard model”. Theoretically, the only new features that are still allowed are additional colored matter or higher-dimensional operators, both of which are better described as “beyond the standard model” than “beyond QCD”.

Apart from this, we know that our current theory of QCD remains valid (as a theory) at arbitrarily short distances, because of asymptotic freedom.

This example also illustrates what a fundamental theory is not. There are not many quantities we can compute in QCD, and certainly not with fantastic accuracy. The mathematical foundations of the theory, and especially a proof of confinement, are still not well established, and we have only a limited number of rather crude tools at our disposal. All of this may change in the future, and we may in fact arrive at a completely different way of describing the theory, but there are good reasons to believe that the currently known theory of QCD is fundamentally the correct one, and that whatever other description we may find can be derived from it.

The same is true for the other two standard model interactions, apart from two problems: the Higgs mechanism and the existence of a Landau pole in the ultraviolet behavior of the U(1) coupling.

So is it unreasonable to hope for a similar fundamental theory of quantum gravity? The traditional particle physics point of view is that gravity is just another interaction, mediated by a spin-2 instead of a spin-1 particle. If we are able to find theories of the spin-1 interactions that might well be called “fundamental”, then why not for the only known interaction that is still missing?

[1] This would still make the power a mathematically unique value, but this is as far as we can stretch this example.

The Landscape

The String Theory Landscape is one of the most important and least appreciated discoveries of the last decades.

It implies a fundamental non-uniqueness of the gauge theory underlying the Standard Model. This shatters a dream of several generations of physicists. For that reason alone, it is important.

String Theory has been pointing us in this direction for a long time, but even before the re-emergence of String Theory it could have been understood that the dream was misguided, because it overlooked anthropic coincidences.

Inspired by early results from String compactifications, I have been defending that point of view for a long time, and first did so in writing in a speech in 1998.

The argument expressed in that speech went quite a bit further than saying “the number of string vacua appears to be large, and therefore we will eventually need to invoke the anthropic principle”. My point of view was then, and still is now, that a huge number of gauge theory vacua is a requirement for a fundamental theory in order to understand anthropic coincidences.

Having worked on String Theory for more than twenty years now, I am very pleased to see that it seems to satisfy that requirement. It gives me a strong feeling that we might be on the right track, even if it is not an easy track.

The notes on this page are an expanded version of the first part of several talks I gave recently (in Göteborg, Berlin and London). A pdf version of the slides of these talks can be found here.

The slides of a colloquium in Hamburg can be found here.

The slides of a talk at the Dutch Theoretical Physics meeting in Dalfsen, 14 May 2009, are here. There is also a keynote version with animations.

Parts of these notes were inspired by reactions I got during these talks. The reactions to this kind of idea have always been very puzzling, and have evolved in an interesting way.

Peter Zerwas asked me to publish a written version of the Hamburg talk in Reports on Progress in Physics. The reference is Rept.Prog.Phys.71:072201,2008. It is available electronically via this link. While writing this up I ended up with far more material than I ever thought possible, and I decided to make the full version available via the e-print arXiv.

I decided to give the Hamburg talk and the papers the title “The Emperor’s Last Clothes?”. This refers on the one hand to the eternal stupidity of mankind in thinking that what we see must be all there is or can be, and on the other hand to the denial of the obvious until it cannot be denied anymore -- at which point everyone will claim that it was obvious. The word “last” refers to the hope for a final theory, whatever that might mean. I am not at all opposed to such a notion, but a lot of time will be needed to see if the question mark can be replaced by an exclamation mark.

Unfortunately some people try to take the attitude that they have always known that the emperor of uniqueness had no clothes, but that they did not find it worth mentioning. I think those people simply did not get it, or are still in a denial phase, holding on to some last straw that they will mention only implicitly (“stabilizing all moduli is very hard”). I have a lot more respect for people who disagree with me on this issue, or who change their minds in an open and honest way.

Here is a good example of the latter.

Recently, E. Witten discussed his changing views on the landscape in his acceptance speech for the Lorentz Medal, awarded by the KNAW, the Dutch Royal Academy of Sciences. The theme of his talk was how string theory has forced us to change our minds about certain things. The last of these things was uniqueness, about which he said:

There possibly has been another major change in the interpretation of the theory in roughly the last decade ... but it is hard to know.

This speech should be of interest to anyone who tries to pretend that nothing has happened. Although Witten did not choose sides on this issue, he does agree that it is important:

If the “landscape” of string vacua is the right concept for describing the real Universe, it is yet another big shift in our interpretation of the theory.

The two quotes above are from the pdf files of his speech, which is available here. The spoken text can be obtained here.

I was pleased to see that during his talk he recommended my paper “The Emperor’s Last Clothes?” for further reading.

The text below is essentially an early version of that paper.

For more details see the extended version of my review article written for Rev. Mod. Phys.